I recently ran a virtual retrospective session at the excellent SPA 2021 titled “Agile Delivery and Data Science: A Marriage of Inconvenience?” about agile deliveries which include some kind of data science component. I decided to submit the session proposal because over the last few years I’ve observed some repeating pain points whilst working on agile data platforms, and conversations with others outside our organisation suggested, anecdotally, that these were not unique to us. I was keen to understand how widespread these issues might be, and to share and learn from the participants which solutions they had tried, and what had succeeded and failed in which contexts. Given the limited set of captured data points, the following is clearly not conclusive. However, I hope it might still be of some help to others experiencing similar challenges.

Using the recently discovered and excellent retrotool, the retrospective was structured in two parts: the first focusing on pain points, and the second focusing on what attempted solutions had worked and what had not worked (unfortunately the final discussion ended up being a bit pushed for time, so apologies to the participants for not managing that better). In reviewing the points raised I then tried to structure the resulting themes in an “outside-in” manner, starting with the customer and then working back up the delivery dependency tree to the more technical delivery concerns. The outputs were as follows.

Pain Points

Customer Identity

The Data Mesh architectural pattern has recently gained a lot of popularity as an adaptable and scalable way of designing data platforms. Having worked on knowledge graph systems using domain-driven design for many years now, I’m a pretty strong advocate of the data mesh principles of domain ownership and data products. Data Mesh “solves” the problem of how to work with data scientists by treating them as the customer. That’s a neat trick, but it only really works where that model reflects the actual delivery dependencies – i.e. in the instance that there are no inbound dependencies from the outputs of data science back into product delivery. In my experience, that most typically applies in enterprise BI scenarios where organisations are becoming more data-driven and need to aggregate data emitted from services and functions across their value streams. In such situations there is generally a unidirectional dependency from the data services out to the data science teams, and the data mesh approach works nicely.

In other contexts however, it doesn’t work so well. Firstly, in situations where data science outputs are an integral part of the product offering e.g. where you are serving predictive or prescriptive analytics to external paying users, then data scientists can’t just be treated as “the customer”. They need to be integrated into the cross-functional delivery team just like everybody else, otherwise the dynamics of shipping product features can become severely disrupted. Secondly, in situations where data science outputs are being used to reconfigure and scale existing manual processes then again the primary customers aren’t the data scientists. Instead they are typically the impacted workers, who most often see their roles changing from task execution to process configuration, quality assurance and training corpus curation.

Product Ownership

The role of agile product owner demands a skillset that is reasonably transferable into the data product owner role in data mesh enterprise BI scenarios. However, in contexts where data science outputs are an integral part of the product offering, the data product owner role generally requires domain expertise orders of magnitude deeper than typical agile product ownership roles. In sectors like healthcare and life sciences, two critical product concerns are only addressable with deep domain expertise: a) which algorithms could best help solve the problems your customers/users care about most, and b) the limitations and assumptions on which those algorithms are based, which determine the contextual boundaries of their usefulness. Such specialists almost certainly don’t have additional skills in agile product management (or, for that matter, in production-quality software development; more on this below).

Stakeholder Expectation Management

Due to a general lack of understanding about machine learning amongst many stakeholders, there are frequently unrealistic expectations about what is achievable both in terms of algorithm execution speed and precision/recall. This can be compounded by the challenges of delivering trained statistical models iteratively, where often the combination of poor quality initial training data and a lack of target hyperparameter ranges can result in early releases with relatively poor ROC curves or F1 scores. Such deliverables combined with a lack of stakeholder understanding about technical constraints can have a compounding effect on undermining trust in the delivery team.
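When explaining these metrics to stakeholders, it can help to show how they fall out of a handful of raw counts. The following is a minimal sketch only: the function name and the counts are invented for illustration and are not data from the session.

```python
def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Compute precision, recall and F1 from raw classification counts.

    tp: true positives, fp: false positives, fn: false negatives.
    Any metric whose denominator is zero is reported as 0.0.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Invented counts for an early release trained on poor quality data...
early = precision_recall_f1(tp=40, fp=60, fn=40)
# ...and for a later iteration after the training data has been improved.
later = precision_recall_f1(tp=80, fp=20, fn=20)
```

Showing the trend between iterations in these terms, rather than a single early score, is one way of framing progress for stakeholders who are new to machine learning.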

Skills

Simon Wardley has developed his excellent Pioneer/Settler/Town Planner model for describing the differing skill sets and methods which are appropriate to the various stages in the evolution of technology. It offers a very clear explanation for one of the most common friction points in agile data science programs: such programs pull Pioneer-style data scientists, who are most familiar with research work using Jupyter notebooks and unused to TDD or CI/CD practices, together with Town Planner-style data engineers, who are used to back office data warehouse ETL work and/or data lake ingest jobs, into Settler-style cross-functional agile delivery teams which aspire towards generalising specialist skill sets rather than narrow specialisations. This can manifest both during development via substantially different dev/test workflows, and during release via the absence of due consideration for operational concerns such as access control, scaling/performance (via a preference for complex, state-of-the-art frameworks over simplicity) and resilient data backup procedures.

Fractured Domain Model

Where data scientists aren’t fully integrated into delivery teams, a common observation is the introduction of domain model fractures or artificial boundaries in the implementation between “data science code” and “software engineering code”. Most typically this occurs when data science teams develop blackbox containerised algorithms which they then hand over the wall to the delivery team for deployment. It is quite common for different algorithms in the same problem space to share first or second order aggregates which are calculated in the early part of the execution sequence. By treating the whole algorithm as a blackbox, these lower level aggregates are needlessly recalculated time and time again, impacting performance, scalability and caching. Also, any variation in the formulae used to calculate these lower order aggregates remains hidden. Whilst the resulting differences in outputs might be well within the error margins of any prediction being generated, where such data inconsistencies become visible to end users it can undermine their trust in the product.
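To illustrate the shared-aggregate point, here is a minimal sketch in which two algorithms in the same problem space reuse one definition of the lower order aggregates instead of each recalculating them inside its own blackbox. All names and data here are hypothetical, and `functools.lru_cache` simply stands in for whatever caching or shared service a real platform would use.

```python
from functools import lru_cache
from statistics import mean, pstdev

# Hypothetical in-memory stand-in for a shared data product.
_OBSERVATIONS = {
    "series-a": (2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0),
}

# Counts how often the aggregates are actually computed (for illustration).
CALLS = {"aggregates": 0}


@lru_cache(maxsize=None)
def shared_aggregates(series_id: str) -> tuple[float, float]:
    """Mean and population standard deviation, computed once per series."""
    CALLS["aggregates"] += 1
    values = _OBSERVATIONS[series_id]
    return mean(values), pstdev(values)


def z_score(series_id: str, value: float) -> float:
    """Algorithm 1: standardise a value using the shared aggregates."""
    mu, sigma = shared_aggregates(series_id)
    return (value - mu) / sigma


def is_outlier(series_id: str, value: float, threshold: float = 2.0) -> bool:
    """Algorithm 2: flag outliers using exactly the same mean and std dev."""
    mu, sigma = shared_aggregates(series_id)
    return abs(value - mu) > threshold * sigma
```

Because both algorithms call the same function, the aggregate is calculated once and any change to its formula is visible in one place, rather than hidden inside separate containers.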

Technical Debt

Agile data science programs seem to be particularly prone to incurring payback on existing legacy technical debt in the very early stages of delivery. Often this is due to the presence of data lakes, which historically swapped the upfront data cleansing and normalisation costs of data warehousing for a just-in-time approach driven by use case demand. Whilst reasonable enough from a cost optimisation perspective, this approach unfortunately violates Postel’s Law of being “liberal in what you accept and conservative in what you send” (or at least implies a very substantial lag between data being accepted and then sent onwards). Migrating data from a data lake into a modern collection of microservice data products almost always requires those deferred cleansing and normalisation debts to be paid off. The other factor is that predictive analysis normally highlights any data quality issues which may previously have lurked beneath your radar, as their impact gets amplified once the data is used to train forecasting models.

What Didn’t Work Well

Treating Data Science as One Thing

Thinking about data science from a Wardley Mapping point of view, it quickly becomes clear that the discipline in fact covers a wide range of skills and activities: from true R&D on innovative machine learning architectures (genesis), to statistical software engineering and integration of existing model frameworks (product), through to data pipeline engineering (commodity). Treating all of these as a single activity and skillset is the underlying cause of many delivery problems.

Data Science as an upstream R&D function

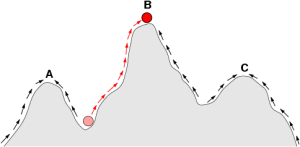

The most common manifestation of “treating data science as one thing” is to move all the data scientists into an upstream R&D function. If what they are working on is in fact “product” phase deliverables, the unavoidable result is a shift into a more waterfall-style delivery model which will fundamentally impact your agile delivery capabilities. Conversely, if they are working on true “genesis” phase innovation work, it raises the question of whether this really makes strategic sense for many organisations. There has been a massive recruiting push for data scientists recently, many of whom have been building machine learning capabilities which now exist as cloud services or else will do very shortly as the technology matures. Unless you work for a big tech company or your organisation has very niche requirements and differentiators, it is often unlikely that you will really need a data science R&D capability going forwards.

Additional team between Data Science and Software Engineering

Some organisations try to keep data scientists in ringfenced teams and then add an interface layer between them and software engineering via a team with roles named something like “machine learning engineer” or similar. The fundamental problem with this approach is that it is trying to solve a dependency problem by adding more dependencies, therefore it is pretty much guaranteed not to work.

What Worked Well

Integrating Data Scientists into Cross-functional Teams

The best way to deal with dependency problems is to restructure your delivery process so that its boundaries better reflect the flow of value. Unless you have outbound-only dependencies into your data science teams, that means integrating “product” phase data science into your cross-functional delivery teams. Pairing data scientists with software engineers, TDDing your algorithm invocation code, and adding data scientists into Three Amigos (Four Amigos?) feature discussion/planning might have some early impact on team velocity whilst unfamiliar skills are shared and learned. However, it rapidly pays off in terms of maintaining a high quality agile product delivery capability.

Domain Expert as Product Owner

For complex domains which require deep subject matter expertise, it is generally better to pair a domain expert with an agile coach to serve as product owner rather than trying to cross-train an experienced agile PO. A detailed understanding of your customers’ problems should always trump knowledge of delivery methodologies, otherwise you simply risk the common failing of building the wrong thing in the right way.

Use of Demonstrator Apps/Test Harnesses to Show Progress

Interestingly, participants found that demonstrator apps, test harnesses and tools such as AWS Quicksight or Google Data Studio were a lot more effective for demonstrating early progress to stakeholders than early software product releases. One reason for this is that poor algorithm performance viewed in the context of the end product tended to generate panic and create blindness in stakeholders, whereas they were a lot more open to explanations of current constraints when viewing an algorithm being executed in isolation.

Design for Incremental Automation over Big-Bang

Finally, the agile principles of incremental, adaptive delivery should be applied as much to process automation via machine learning as they are to the product delivery itself. The extent to which it is optimally cost effective to augment human processes with machine assistance is generally not something which can be decided upfront. For this reason, gambling everything on 100% full automation creates a high risk of failure or else major cost overruns. Instead it is much safer to iterate from manual to increasingly automated processes, designing your systems around the sources of variability until the point of acceptable performance is achieved. As an example, a machine learning service which classifies research literature may have results which vary by subject area (e.g. it might be better at classifying drug interventions than behavioural interventions) and source (e.g. it might perform better against well structured documents from one source compared to other documents from another source). In such circumstances, designing quality assurance tools that support sample rate checks which can be varied by subject area and data source is much more likely to succeed compared to a delivery which naively assumes machine learning can solve everything within cost effective limits.
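As a rough sketch of what such variable sample rate checks might look like, the following keeps a review rate per subject area and data source, and decides whether a given machine classification should be routed to a human reviewer. The keys, rates and function names are all invented for illustration; a real tool would tune the rates from measured error rates.

```python
import random

# Hypothetical fraction of machine classifications sent for human review,
# keyed by (subject area, data source). Lower rates mean more automation.
SAMPLE_RATES = {
    ("drug", "source-a"): 0.05,
    ("behavioural", "source-a"): 0.25,
    ("behavioural", "source-b"): 0.50,
}

# Combinations that have not been measured yet are fully reviewed.
DEFAULT_RATE = 1.0


def review_rate(subject_area: str, source: str) -> float:
    """Look up the review rate for a subject area and source."""
    return SAMPLE_RATES.get((subject_area, source), DEFAULT_RATE)


def needs_human_review(subject_area: str, source: str, rng: random.Random) -> bool:
    """Randomly select a classification for review at the configured rate."""
    return rng.random() < review_rate(subject_area, source)
```

Starting each new combination at full review and lowering its rate as confidence grows is one way of iterating from manual towards increasingly automated processing.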

Thanks

I would like to thank all the participants who attended the retrospective session, and in particular Paul Shannon and Immo Huneke for their contributions.